For the most part, I really like the C++ language. That said, I also have a small list of things that I wish had been done differently. For years, enumerations have been at the top of that list. Enums have a couple of distinct problems that make them troublesome, and while there are techniques to mitigate some of their issues, they remain fundamentally flawed.

Thankfully, C++11 added scoped enums (or “strongly-typed” enums), which address these problems head-on. In my opinion, the best part about scoped enums is that the new syntax is intuitive and feels natural to the C++ language.

In an effort to build a case for why scoped enums are superior, we will first discuss the aforementioned deficiencies of their unscoped counterparts. Throughout this discussion we will also outline how we addressed some of these concerns in Sauce. Afterward, we will explore scoped enums and the task of transitioning Sauce to use them.

Terminology

Before we begin, let’s briefly establish some terminology. An unscoped enum has the following form:

```
enum IDENTIFIER
{
    ENUMERATOR,
    ENUMERATOR,
    ENUMERATOR,
};
```

The identifier is also referred to as the “type” of the enum. The list inside the enum is composed of enumerators. Each enumerator has an integral value.
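Unless you specify otherwise, the first enumerator has the value 0, and each following enumerator is one greater than the one before it. An explicit value restarts the count from that point. The sketch below uses a hypothetical Color enum that is not part of Sauce, and checks the values at compile time with static_assert:

```cpp
// Hypothetical enum, used only to illustrate how enumerator values are assigned.
enum Color
{
    eRed,        // implicitly 0
    eGreen,      // implicitly 1 (previous value + 1)
    eBlue = 10,  // explicitly 10
    eAlpha,      // implicitly 11 (counting resumes after the explicit value)
};

// Compile-time checks of the values described in the comments above.
static_assert(eRed == 0, "the first enumerator defaults to 0");
static_assert(eGreen == 1, "each enumerator defaults to the previous value + 1");
static_assert(eBlue == 10, "an explicit value is used as given");
static_assert(eAlpha == 11, "counting resumes after an explicit value");
```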

Problem 1: Enumerators are treated as integers inside the parent scope.

Aliased Values

Consider the case where you have two enums inside the same parent scope. Unfortunately, the compiler does nothing to enforce that a given enumerator is associated with one enum rather than the other. This can cause a couple of issues. Here’s an example:

```cpp
namespace Example1
{
    enum Shape
    {
        eSphere,
        eBox,
        eCone,
    };

    enum Material
    {
        eColor,
        eTexture,
    };
}
```

Now let’s see what happens when we try to use these enums in some client code:

```cpp
const Example1::Shape shape = Example1::eSphere;

if (shape == Example1::eSphere)
    printf("SPHERE\n");

if (shape == Example1::eBox)
    printf("BOX\n");

if (shape == Example1::eCone)
    printf("CONE\n");

if (shape == Example1::eColor)
    printf("COLOR\n");

if (shape == Example1::eTexture)
    printf("TEXTURE\n");
```

The code above prints both “SPHERE” and “COLOR”. This happens because the enumerators of an unscoped enum are implicitly converted to integers. Since shape has the value 0, it matches both eSphere and eColor.

Sadly, the only workable solution is to manually assign each enumerator a value that is unique within the parent scope. This is far from ideal because of the added maintenance cost.
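Here is a minimal sketch of that workaround. The Example1B namespace is hypothetical. Material's values start at 100, an arbitrary offset chosen for illustration, so that no Material enumerator shares a value with a Shape enumerator. The Aliases helper is also hypothetical; it simply makes the int comparison from the example above explicit.

```cpp
namespace Example1B  // hypothetical variant of Example1
{
    enum Shape
    {
        eSphere = 0,
        eBox    = 1,
        eCone   = 2,
    };

    // Material starts at a different offset so that no Material enumerator
    // shares an integer value with a Shape enumerator in this namespace.
    enum Material
    {
        eColor   = 100,
        eTexture = 101,
    };
}

// Hypothetical helper: returns true if a Shape and a Material compare equal
// once both are converted to int (the aliasing problem described above).
inline bool Aliases(const Example1B::Shape shape, const Example1B::Material material)
{
    return static_cast<int>(shape) == static_cast<int>(material);
}
```

The maintenance cost shows up as soon as Shape grows. Every new enumerator must stay clear of Material's range, and only discipline, not the compiler, enforces that.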

Enumerator Name Clashes

Additionally, a second issue arises because the enumerators of an unscoped enum are injected into the enum's parent scope: enumerator name clashes. For instance, consider modifying the previous case to add an “invalid” enumerator to each enum. While this makes sense conceptually, the following code will not compile:

```cpp
namespace Example2A
{
    enum Shape
    {
        eInvalid,
        eSphere,
        eBox,
        eCone,
    };

    enum Material
    {
        eInvalid,   // error: eInvalid is already declared in Example2A by Shape
        eColor,
        eTexture,
    };
}
```

Although enumerator name clashes are not too common, it is generally bad practice to establish coding conventions that depend on the rarity of such situations.

Consequently, this usually forces you to mangle the enumerator names to include the enum type. Modifying the previous example might look something like this:

```cpp
namespace Example2B
{
    enum Shape
    {
        eShape_Invalid,
        eShape_Sphere,
        eShape_Box,
        eShape_Cone,
    };

    enum Material
    {
        eMaterial_Invalid,
        eMaterial_Color,
        eMaterial_Texture,
    };
}
```

This version of the code will compile, but now the enumerator names look a little weird. It is also worth pointing out that we are now repeating ourselves: the enum identifier is duplicated in every one of its enumerators.

Another way to solve the name clash issue is to wrap the enum in an additional scoping object: a namespace, class, or struct. This method lets us keep our original enumerator names, which I like. However, it introduces a new problem: now we need two names, one for the scope and one for the enum itself.

Admittedly, there are a few different ways to handle this, but for the sake of the example let’s keep things simple:

```cpp
namespace Example2C
{
    namespace Shape
    {
        enum Enum
        {
            eInvalid,
            eSphere,
            eBox,
            eCone,
        };
    }

    namespace Material
    {
        enum Enum
        {
            eInvalid,
            eColor,
            eTexture,
        };
    }
}
```

While the extra nesting does make the declaration a bit ugly, it solves the enumerator name clash problem. Furthermore, it also forces client code to prefix enumerators with their associated scoping object, which I personally consider a big win.

```cpp
// in some Example2C function...
const Shape::Enum shape = GetShape();

if (shape == Shape::eInvalid)
    printf("Shape::Invalid\n");

if (shape == Shape::eSphere)
    printf("Shape::Sphere\n");

if (shape == Shape::eBox)
    printf("Shape::Box\n");

if (shape == Shape::eCone)
    printf("Shape::Cone\n");

const Material::Enum material = GetMaterial();

if (material == Material::eInvalid)
    printf("Material::Invalid\n");

if (material == Material::eColor)
    printf("Material::Color\n");

if (material == Material::eTexture)
    printf("Material::Texture\n");
```

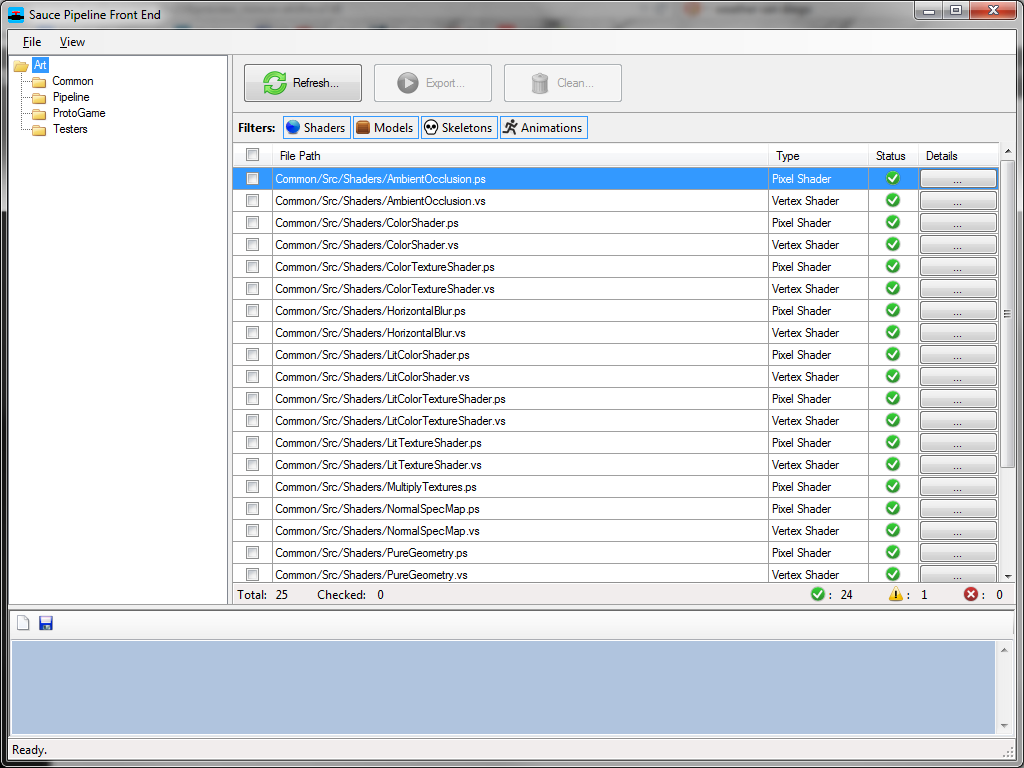

In fact, before the transition to scoped enums, most of the enums in Sauce were scoped this way. Unfortunately, having several choices in situations like this breeds inconsistency. Sauce was no exception: namespaces, classes, and structs were all being used as scoping objects for enums in different parts of the code base (needless to say, I was pretty disappointed by this discovery).
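For comparison, here is a minimal sketch of the struct-wrapped variant of the same Shape enum. The GetShapeName helper is hypothetical. Client code reads exactly like the namespace version, with Shape::eSphere and so on, which is part of why the different wrapper styles can coexist unnoticed in one code base.

```cpp
// Hypothetical struct-scoped version of the namespace-scoped Shape above.
struct Shape
{
    enum Enum
    {
        eInvalid,
        eSphere,
        eBox,
        eCone,
    };
};

// Hypothetical client code: it is spelled exactly as with the namespace wrapper.
inline const char* GetShapeName(const Shape::Enum shape)
{
    switch (shape)
    {
    case Shape::eSphere: return "Sphere";
    case Shape::eBox:    return "Box";
    case Shape::eCone:   return "Cone";
    case Shape::eInvalid:
    default:             return "Invalid";
    }
}
```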

Problem 2: Unscoped enums cannot be forward declared.

This bothers me a lot. I’m very meticulous with my forward declarations and header includes, but unscoped enums have, at times, undermined my efforts. I also feel that they subvert the C++ mantra of not paying for what you don’t use.

For instance, if you want to use an enum as a function parameter, the full enum definition must be available, requiring a header include if you don’t already have it.

The following is a stripped-down example of the case in point:

Shape.h

```cpp
namespace Shape
{
    enum Enum
    {
        eInvalid,
        eSphere,
        eBox,
        eCone,
    };
}
```

ShapeOps.h

```cpp
#include "Shape.h" // <-- BOO!

namespace ShapeOps
{
    const char* GetName(const Shape::Enum shape);
}
```

Unfortunately, before C++11 there was no way around a full include for an unscoped enum, and C++11 only helps if you modify the enum to give it a fixed underlying type. The situation is even more costly if the enum is inside a class header file that has its own set of includes.
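For completeness: since C++11, an unscoped enum can be declared without its enumerators (an opaque declaration), but only if it has a fixed underlying type, and both the declaration and the definition must spell out that type. Here is a minimal sketch for the namespace-scoped Shape. The three files are collapsed into one translation unit so the snippet compiles on its own, and the GetName body is a hypothetical implementation, not Sauce's.

```cpp
// --- ShapeOps.h (sketch) ---
// Opaque declaration: legal only because the underlying type (int) is given.
namespace Shape
{
    enum Enum : int;
}

namespace ShapeOps
{
    const char* GetName(const Shape::Enum shape);
}

// --- Shape.h (sketch) ---
// The definition must repeat the same underlying type.
namespace Shape
{
    enum Enum : int
    {
        eInvalid,
        eSphere,
        eBox,
        eCone,
    };
}

// --- ShapeOps.cpp (sketch, hypothetical implementation) ---
namespace ShapeOps
{
    const char* GetName(const Shape::Enum shape)
    {
        switch (shape)
        {
        case Shape::eSphere: return "Sphere";
        case Shape::eBox:    return "Box";
        case Shape::eCone:   return "Cone";
        default:             return "Invalid";
        }
    }
}
```

The catch is that every existing unscoped enum has to be edited to add the : int before it can be forward declared this way.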

Scoped Enums

Scoped enums were introduced in C++11. I am excited to report that not only do they solve all of the issues discussed above, but they also provide the client code with clean, intuitive syntax.

A scoped enum has the following form:

```
enum class IDENTIFIER
{
    ENUMERATOR,
    ENUMERATOR,
    ENUMERATOR,
};
```

That’s right — all you have to do is add the class keyword after enum and you have a scoped enum!
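One detail explains why scoped enums can be forward declared. When no underlying type is written, a scoped enum's underlying type is int, so the compiler always knows its size. You can also choose the type explicitly with a colon after the name. The short sketch below checks this at compile time with std::underlying_type from <type_traits>. The unsigned char choice for Material is only illustrative and is not taken from Sauce. Note also that both enums can now declare eInvalid without clashing.

```cpp
#include <type_traits>

enum class Shape  // no underlying type written, so it is int
{
    eInvalid,
    eSphere,
    eBox,
    eCone,
};

enum class Material : unsigned char  // underlying type chosen explicitly
{
    eInvalid,  // no clash with Shape::eInvalid: each lives in its own scope
    eColor,
    eTexture,
};

static_assert(std::is_same<std::underlying_type<Shape>::type, int>::value,
              "a scoped enum's underlying type defaults to int");
static_assert(std::is_same<std::underlying_type<Material>::type, unsigned char>::value,
              "an explicit underlying type is honored");
static_assert(sizeof(Material) == 1, "Material occupies a single byte");
```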

Converting the final example from the last section to use a scoped enum looks like the following:

Shape.h

```cpp
enum class Shape
{
    eInvalid,
    eSphere,
    eBox,
    eCone,
};
```

ShapeOps.h

```cpp
enum class Shape; // forward declaration -- YAY

namespace ShapeOps
{
    const char* GetName(const Shape shape);
}
```
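The forward declaration is useful for more than function parameters. In the sketch below, three hypothetical files are collapsed into one translation unit so it compiles on its own. A Renderable struct, also hypothetical, stores a Shape by value using only the forward declaration. Only ShapeOps.cpp needs the full definition, because it is the only place that names the enumerators.

```cpp
// --- ShapeOps.h (sketch): only a forward declaration of Shape ---
enum class Shape;  // opaque declaration; the underlying type defaults to int

namespace ShapeOps
{
    const char* GetName(const Shape shape);
}

// Hypothetical client that sees only ShapeOps.h. It can store and pass a
// Shape because the type is already complete, but it never names an enumerator.
struct Renderable
{
    Shape shape;

    const char* Describe() const { return ShapeOps::GetName(shape); }
};

// --- Shape.h (sketch): the full definition ---
enum class Shape
{
    eInvalid,
    eSphere,
    eBox,
    eCone,
};

// --- ShapeOps.cpp (sketch): the one place that needs both headers ---
namespace ShapeOps
{
    const char* GetName(const Shape shape)
    {
        switch (shape)
        {
        case Shape::eSphere: return "Sphere";
        case Shape::eBox:    return "Box";
        case Shape::eCone:   return "Cone";
        default:             return "Invalid";
        }
    }
}
```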

Here is an example of client code:

```cpp
const Shape shape = GetShape();

if (shape == Shape::eInvalid)
    printf("Shape::Invalid\n");

if (shape == Shape::eSphere)
    printf("Shape::Sphere\n");

if (shape == Shape::eBox)
    printf("Shape::Box\n");

if (shape == Shape::eCone)
    printf("Shape::Cone\n");
```

This is exactly what we were looking for all along!

Another advantage of scoped enums is that they cannot be implicitly converted to integers. This solves the enumerator value aliasing problem described earlier. Comparing a Shape with a Material enumerator is now a compile-time error rather than a silent bug.
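Here is a minimal sketch of that compile-time enforcement using std::is_convertible from <type_traits>. The Shape and Material definitions mirror the earlier examples. ToInt is a hypothetical helper that shows the explicit static_cast you write when you genuinely need the integer value.

```cpp
#include <type_traits>

enum class Shape { eInvalid, eSphere, eBox, eCone };
enum class Material { eInvalid, eColor, eTexture };

// A scoped enum does not implicitly convert to int...
static_assert(!std::is_convertible<Shape, int>::value,
              "a scoped enum does not implicitly convert to int");

// ...so the aliasing bug from Problem 1 no longer compiles:
//     if (shape == Material::eColor) { ... }   // error: Shape and Material cannot be compared

// Hypothetical helper: when you really need the integer, ask for it explicitly.
inline int ToInt(const Shape shape)
{
    return static_cast<int>(shape);
}
```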

Transitioning to Scoped Enums

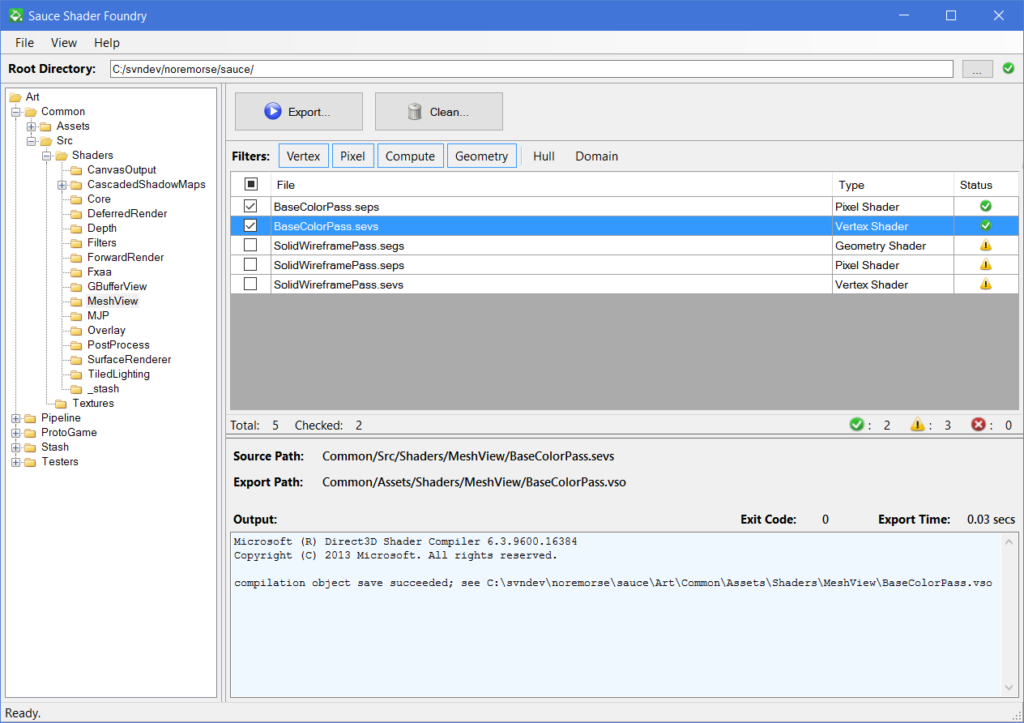

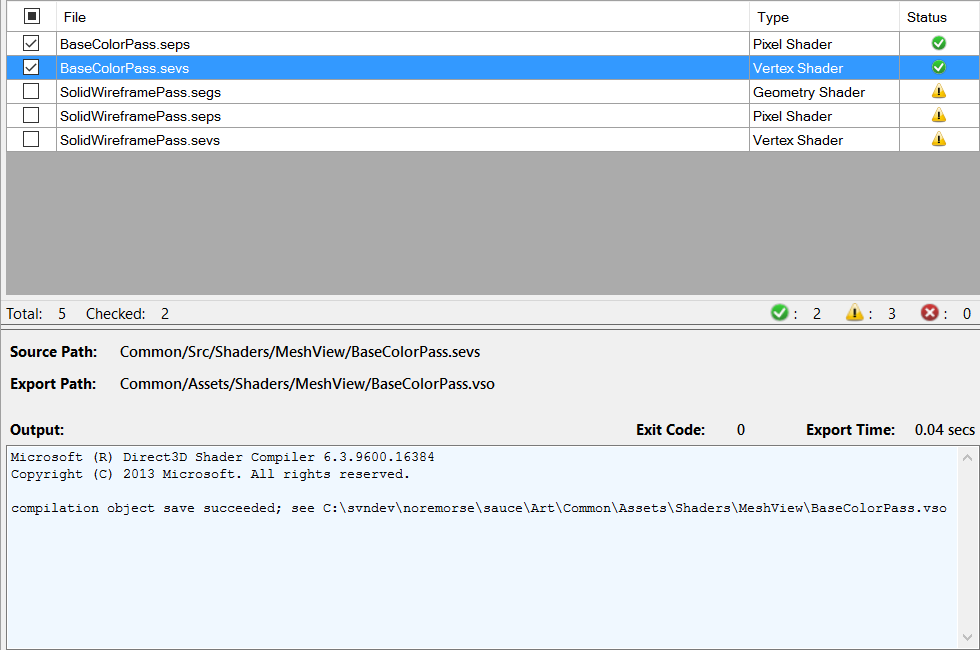

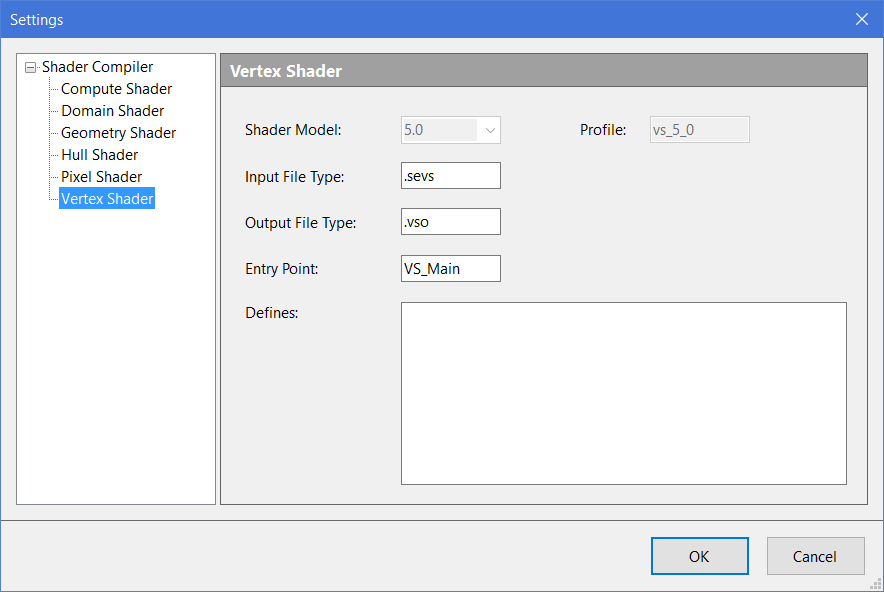

Sauce is a fairly large code base: ~200K lines of code at the time of this writing. It took me a few days to convert 100+ unscoped enums to scoped enums. Because I was manually scoping each enum, this was not a simple “search and replace” task. I also spent extra time replacing includes with forward declarations where appropriate.

Overall, I strongly believe the time investment was well worth it. The scoped enum syntax is natural, and because scoped enums can be forward declared, they give you an opportunity to reduce your header include count in some places. If you are considering transitioning your legacy code base to scoped enums, I highly recommend it!